Stanford University, CA

M.S. Student, Electrical Engineering

Concentration: Robotics / Computer Vision

- GPA: N/A

- Duration: Sep. 2025 - Apr. 2027 (Expected)

🎉 UPDATE 🎉

Our paper “Efficient Construction of Implicit Surface Models From a Single Image for Motion Generation” has been accepted to ICRA 2026 and is now available on arXiv!

I recently joined the Stanford Vision and Learning Lab (SVL), advised by Prof. Fei-Fei Li.

🌟 Highlight 🌟

Wayne has dedicated much of his time to robotics and computer vision through projects, internships, and research. In the summer of 2023, he developed a dynamic obstacle avoidance system for the TurtleBot4 at UC San Diego that relies solely on depth camera input. His internship at ITRI further deepened his understanding of the challenges in bridging the Sim2Real gap, particularly in designing perception pipelines that remain robust under real-world conditions.

Before beginning his studies at Stanford, he conducted remote research in computer vision and robotics at the DROP Lab, Carnegie Mellon University (CMU), under the supervision of Prof. Weiming Zhi and Dr. Tianyi Zhang.

M.S. Student, Electrical Engineering

Concentration: Robotics / Computer Vision

Bachelor of Science in Interdisciplinary Program of Engineering

Concentration: Electrical Engineering / Power Mechanical Engineering

Researcher @ Stanford Vision and Learning Lab

Sep. 2025 - Present

Current Work:

1. Constructed a real-to-sim-to-real pipeline that generates “controllable digital cousins” to close the sim-to-real gap in robot learning policies

2. Collected real-world data and developed a real-to-sim replay method

Automotive AI Algorithm Development Intern

Sep. 2024 - Nov. 2024, Mar. 2025 - Jul. 2025

We merged and re-annotated the Mapillary and ADE20K datasets to meet the requirements of quadruped robots and autonomous vehicles. The combined dataset was then converted to COCO format to train a custom Mask2Former model with the NVIDIA TAO Toolkit. Finally, the retrained model was integrated into ROS2 for real-time video inference on robotic and vehicle platforms at about 15 FPS.

We built a real-time pedestrian detection and re-identification (re-ID) pipeline using YOLO11n and OSNet. The pipeline matched appearance features by cosine similarity, and the models were exported to ONNX (.onnx) and TensorRT (.engine) formats for acceleration. The pipeline achieved real-time inference at about 25 FPS for robotics and automotive use cases.
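As a rough illustration of the feature-matching step, the sketch below assigns query embeddings to a gallery of known identities by cosine similarity. It is a minimal NumPy sketch, not the pipeline's actual code: the embeddings are assumed to be precomputed (for example by an OSNet-style re-ID network), and the function names and the 0.6 similarity threshold are hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(queries: np.ndarray, gallery: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between rows of `queries` (N x D) and `gallery` (M x D)."""
    eps = 1e-12  # guards against division by zero for all-zero embeddings
    q = queries / np.maximum(np.linalg.norm(queries, axis=1, keepdims=True), eps)
    g = gallery / np.maximum(np.linalg.norm(gallery, axis=1, keepdims=True), eps)
    return q @ g.T  # shape (N, M)


def match_identities(queries, gallery, gallery_ids, threshold=0.6):
    """Give each query the ID of its most similar gallery embedding, or None
    when even the best similarity is below `threshold` (hypothetical value)."""
    if len(gallery) == 0:
        return [None] * len(queries)
    sims = cosine_similarity_matrix(queries, gallery)
    best = sims.argmax(axis=1)  # index of the closest gallery entry per query
    return [
        gallery_ids[j] if sims[i, j] >= threshold else None
        for i, j in enumerate(best)
    ]


# Example: two known identities and two new detections (toy 3-D embeddings).
gallery = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
queries = np.array([[0.9, 0.1, 0.0], [0.0, 0.0, 1.0]])
print(match_identities(queries, gallery, gallery_ids=[7, 12]))  # [7, None]
```

Each query is matched greedily to its single best entry, so two detections can receive the same ID; a tracker that needs one-to-one assignment would typically solve a matching problem (for example with the Hungarian algorithm) over the similarity matrix instead.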

We developed an elevation mapping workflow for the Unitree Go2 quadruped robot by integrating Gazebo with point clouds from an Intel RealSense depth camera. We also integrated a point cloud sampling and processing pipeline with reinforcement learning gait models in simulation. The most challenging parts were employing visual-inertial odometry (VIO) to handle sensor interruptions and implementing forward kinematics to compute the foot coordinates of the Go2. Finally, the system was deployed on the Unitree Go2 for Sim2Real validation.
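To make the forward-kinematics step concrete, here is a minimal sketch for a single three-joint leg (hip abduction, hip pitch, knee pitch), a layout commonly used on Unitree-style quadrupeds. The axis and sign conventions and the link lengths are placeholder assumptions, not Go2 specifications; real values would come from the robot's URDF, and the function name is hypothetical.

```python
import math

# Placeholder link lengths in meters (hypothetical, NOT official Go2 values).
HIP_OFFSET = 0.09  # lateral offset from the abduction axis to the thigh
THIGH_LEN = 0.21
CALF_LEN = 0.21


def foot_position(q_abd: float, q_hip: float, q_knee: float,
                  side: int = 1) -> tuple[float, float, float]:
    """Foot position (x forward, y left, z up) in the hip frame.

    side = +1 for a left leg, -1 for a right leg (assumed convention).
    """
    # Planar two-link chain in the leg's sagittal plane (before abduction).
    x = -THIGH_LEN * math.sin(q_hip) - CALF_LEN * math.sin(q_hip + q_knee)
    z_leg = -THIGH_LEN * math.cos(q_hip) - CALF_LEN * math.cos(q_hip + q_knee)
    y_leg = side * HIP_OFFSET
    # Rotate the point about the x-axis by the abduction angle.
    c, s = math.cos(q_abd), math.sin(q_abd)
    y = c * y_leg - s * z_leg
    z = s * y_leg + c * z_leg
    return x, y, z


# Illustrative standing-like joint angles in radians.
print(foot_position(0.0, 0.8, -1.5))
```

With all joints at zero the foot sits directly below the hip at the full leg length; in practice the joint angles would come from the robot's encoders at each control step.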

Technology Innovation Group of Chairman Office Intern

Jun. 2024 - Sep. 2024

We designed an intuitive Android car app that controls the vehicle's A/C temperature by voice. The pipeline chained Azure Speech Service → Dialogflow intent parsing → CarAPI to operate the HVAC system.

[video]

Mar. 2025 - Sep. 2025

[DROP Lab], Carnegie Mellon University

Advised by Dr. Tianyi Zhang, Prof. Matthew Johnson-Roberson, and Prof. Weiming Zhi

We present Fast Image-to-Neural Surface (FINS), a lightweight framework capable of reconstructing high-fidelity signed distance fields (SDFs) from a single image in 10 seconds.

Our method combines a multi-resolution hash grid with efficient optimization to achieve state-of-the-art accuracy while being an order of magnitude faster than existing methods.

We further evaluated the scalability and real-world usability of FINS through robotic surface-following experiments, demonstrating its utility across a wide range of tasks and datasets.

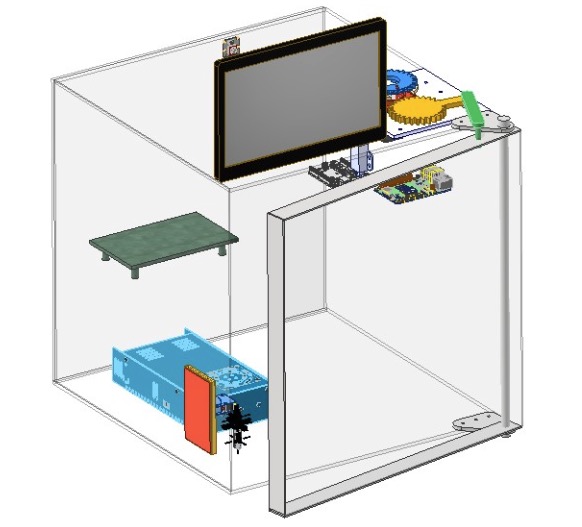

Jan. 2023 - Nov. 2023

[HSCC Lab], National Tsing Hua University

Advised by Prof. Jang-Ping Sheu.

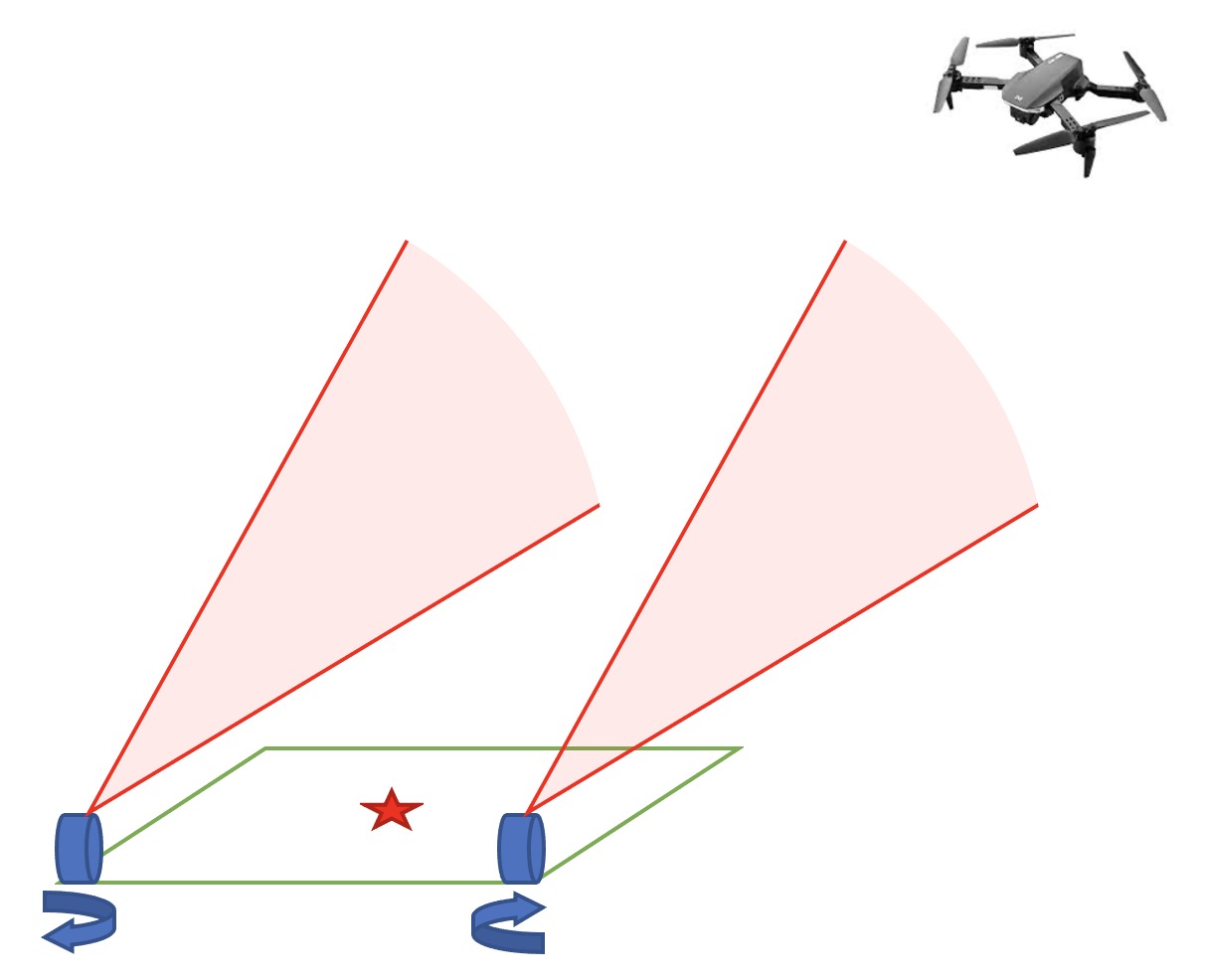

We propose a laser-assisted guidance approach to improve drone landing accuracy in settings where GPS error reaches several meters.

The system combines embedded electronics, a 3D-printed mechanical design, and low-power laser sensing to achieve a landing accuracy of 30-40 cm, while also demonstrating the feasibility of laser-based localization for future autonomous or remotely piloted unmanned aerial vehicle (UAV) applications.

Jun. 2023 - Aug. 2023

[MURO Lab], UC San Diego

Supervised by Prof. Jorge Cortés.

Implemented real-time dynamic obstacle avoidance for mobile robots using vision-based sensing and ROS2.

The system used a TurtleBot4 equipped with an OAK-D Pro camera to achieve precise obstacle tracking and smooth, collision-free navigation.

It also integrated RRT* path planning and Bézier curve smoothing, demonstrating affordable and efficient vision-driven navigation in dynamic environments.
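One simple way to smooth a jagged RRT* path is to treat its waypoints as the control points of a single Bézier curve and sample that curve with De Casteljau's algorithm. The sketch below shows this under that assumption; it is an illustration rather than the project's exact smoothing method, and the function names are hypothetical.

```python
import numpy as np


def de_casteljau(control_points, t: float) -> np.ndarray:
    """Evaluate the Bézier curve defined by `control_points` at parameter t in [0, 1]."""
    pts = np.asarray(control_points, dtype=float)
    # Repeated linear interpolation between consecutive points until one remains.
    while len(pts) > 1:
        pts = (1.0 - t) * pts[:-1] + t * pts[1:]
    return pts[0]


def smooth_path(waypoints, num_samples: int = 50) -> np.ndarray:
    """Sample a smooth curve that starts and ends at the path's endpoints."""
    ts = np.linspace(0.0, 1.0, num_samples)
    return np.array([de_casteljau(waypoints, t) for t in ts])


# Example: a three-waypoint path (e.g., produced by RRT*) in 2-D.
path = [[0.0, 0.0], [1.0, 2.0], [2.0, 0.0]]
curve = smooth_path(path, num_samples=5)
print(curve[2])  # point at t = 0.5 -> [1. 1.]
```

Because a Bézier curve passes only through its first and last control points, the smoothed curve can cut corners near obstacles, so a practical planner would still collision-check the result or smooth the path piecewise.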

(† Corresponding author)

arXiv

[pdf]

@misc{chu2025efficientconstructionimplicitsurface,
  title={Efficient Construction of Implicit Surface Models From a Single Image for Motion Generation},
  author={Wei-Teng Chu and Tianyi Zhang and Matthew Johnson-Roberson and Weiming Zhi},
  year={2025},
  eprint={2509.20681},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2509.20681},
}

W.-T. Chu, T. Zhang, M. Johnson-Roberson, and W. Zhi, “Efficient Construction of Implicit Surface Models From a Single Image for Motion Generation,” arXiv preprint arXiv:2509.20681, 2025. [Online]. Available: https://doi.org/10.48550/arXiv.2509.20681

MASS 2024

[pdf]

@INPROCEEDINGS{10723664,
  author={Kuo, Yung-Ching and Chu, Wei-Teng and Cho, Yu-Po and Sheu, Jang-Ping},
  booktitle={2024 IEEE 21st International Conference on Mobile Ad-Hoc and Smart Systems (MASS)},
  title={Laser-Assisted Guidance Landing Technology for Drones},
  year={2024},
  pages={670-675},
  doi={10.1109/MASS62177.2024.00107}
}

Y.-C. Kuo, W.-T. Chu, Y.-P. Cho, and J.-P. Sheu, “Laser-Assisted Guidance Landing Technology for Drones,” in 2024 IEEE 21st International Conference on Mobile Ad-Hoc and Smart Systems (MASS), Seoul, Republic of Korea, 2024, pp. 670-675, doi: 10.1109/MASS62177.2024.00107.

PMAE 2023 (The Best Oral Presentation Award)

[pdf]

@InProceedings{10.1007/978-981-97-4806-8_17,
  author="Chu, Wei-Teng",
  editor="Mo, John P. T.",
  title="Dodging Dynamical Obstacles Using Turtlebot4 Camera Feed",
  booktitle="Proceedings of the 10th International Conference on Mechanical, Automotive and Materials Engineering",
  year="2024",
  publisher="Springer Nature Singapore",
  pages="195--204",
  isbn="978-981-97-4806-8"
}

W.-T. Chu, “Dodging Dynamical Obstacles Using Turtlebot4 Camera Feed,” in Proceedings of the 10th International Conference on Mechanical, Automotive and Materials Engineering (CMAME 2023), J. P. T. Mo, Ed., Lecture Notes in Mechanical Engineering. Singapore: Springer, 2024, pp. 195-204. [Online]. Available: https://doi.org/10.1007/978-981-97-4806-8_17